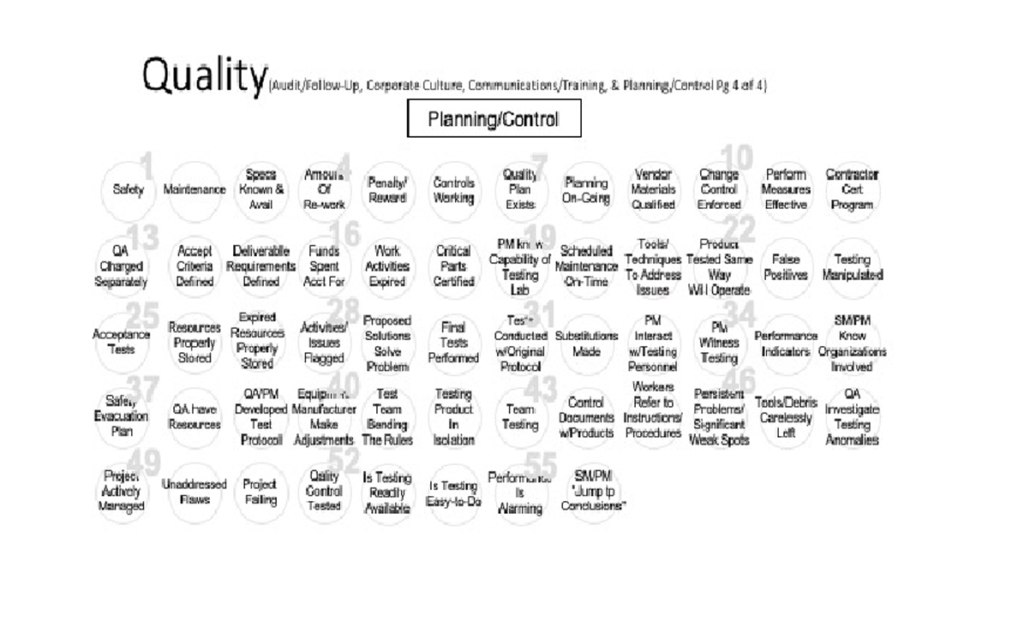

PLANNING/CONTROL

1 – Have safety/ cyber matters been adequately addressed?

2 – Have maintenance concerns been properly addressed?

3 – Are specifications known and available?

4 – Is a large amount of re-work being performed?

5 – Are penalties/ rewards given for inappropriate/exemplary performance?

6 – Are the project controls working?

7 – Are quality controls in place for this project?

8 – Is quality planning ongoing?

9 – Are vendors’/ suppliers’ materials qualified (screened) before acceptance?

10 – Is change control enforced?

11 – Do performance measures exist for every part of the project?

12 – Does the contractor have a certification program?

13 – Is the vendor charging the firm separately for quality control/ quality assurance?

14 – Are acceptance criteria properly defined before the project is completed?

15 – Are deliverable requirements clearly defined?

16 – Are funds spent on contracts fully accounted for? Does PM know the amount spent on each contract and the amount still due?

17 – Are any products/ services, licenses/ qualifications or work activities expired but still in use? (Yes = bad)

18 – Are critical parts certified with records of:

*Who made it?

*The tools used? Is a tool tracking system used?

*Where was it made?

*How was it tested to meet the standards?

*Which standard(s) was (were) used?

19 – Does PM know the capability, type and quantity of things the testing lab is able to test?

20 – Does PM know the number and percent of equipment that has received scheduled maintenance on-time? (No = bad)

21 – Are formally accepted problem solving tools/techniques used to address issues? (Yes = good).

22 – Is the project’s product/service being tested in the same way it will operate in the field, under varied/ extensive real-world conditions? Are field tests producing results that are as good as the research results? (Yes = good)

23 – Is the project’s testing producing a high rate of false positives? (Yes = blue)

24 – Is the testing process being improperly manipulated (e.g., subtly altering the data)? (Yes = bad)

25 – Are acceptance tests done on all critical items? (No = bad)

26 – Does PM ensure that resources are properly stored? (Yes = good)

27 – Does PM ensure that expired resources are properly disposed of? (No = bad)

28 – Are essential activities/ issues flagged as safety-critical identified and addressed in a timely manner? (No = bad)

29 – Will proposed solutions both solve the current problem and avoid worse/ future (or switching) problems? (No = bad)

30 – Are final tests performed before products are sent to the customer, to catch improper corrections? (Yes = good)

31 – Are current tests being conducted with the original testing protocol? (No = bad)

32 – Have any substitutions been made that depart from the original protocol? (No = good)

33 – Does PM interact with testing personnel? (No = bad)

34 – Does PM actually witness the testing, particularly critical testing? (No = bad)

35 – Is SM/ PM taking appropriate steps to ensure that performance indicators are not being manipulated to inflate/ distort reported performance (e.g., misclassification/ false advertising)? (No = bad)

36 – (If a problem exists) Does SM/ PM know how big the problem is and how many agencies or organizations are involved in or tainted by it? (No = bad)

37 – Does the project plan include a safety evacuation plan that covers all workers on the site? (No = bad)

38 – Does quality assurance have the resources and the specialists to keep up with the work that’s being done?

39 – Has QA/PM/ manufacturer developed a test protocol to outline how to test the system? (Yes = good)

40 – Does QA/ PM give the equipment manufacturer(s) the opportunity to adjust their system(s) to make them more effective? (Yes = blue)

41 – Is the test team bending the rules? (See examples below.)

*Is the performance in the field completely/ significantly different from the technical data?

*Are enough tests being completed?

*Are the proper standards being used? (perhaps an older/ outdated/ non-applicable standard is being used?)

*Is the configuration being tested different from the actual product that will be deployed after testing?

42 – Has the team tested (or is it testing) the entire product/ system in isolation? End-to-end? As part of an integrated system? (Yes = good)

43 – Is the team testing (or has it tested) the entire product/ system together with the other systems/ products it will operate with? (Yes = good)

44 – Are project work control documents co-located with products/ components on the production line? (No = bad)

45 – Do production workers refer to their instructions/ procedures before work is started, while it is being performed, and when it is completed? (No = bad)

46 – Is the testing finding any persistent problems/ significant weak spots? (Yes = bad)

47 – Does the QA process include ensuring that tools/ debris are not carelessly left inside the product? (Yes = good)

48 – Does QA/ test team investigate testing anomalies (e.g., minute variations) as well as exact match results? (Yes = good)

49 – Has the project been actively managed at all stages to ensure requirements are met? (Yes = good)

50 – Will any of the identified unaddressed flaws create significant risk (loss or potential loss of life or equipment) if left unfixed? (Yes = bad) Have estimated completion times (e.g., hours, days) for fixing them been determined? (No = bad)

51 – Is the project currently failing during testing under the conditions in which it will be needed most once deployed? (Yes = bad)

52 – Is the quality control system intentionally tested with false data to confirm that it would flag (catch) future problems? Are corrective actions taken to address any deficiencies found? (Yes = good)

53 – Is testing readily available? (Yes = good)

54 – Is testing easy-to-do? (Yes = good)

55 – Is it easy for testing and testers to determine if performance is ‘alarming’? (Yes = good)

56 – Is SM/ PM prone to ‘jumping to conclusions’ or ‘assuming the worst’ BEFORE seeking out alternative solutions that could resolve the situation? (Yes = bad)

Project Risk Assessment and Decision Support Tools