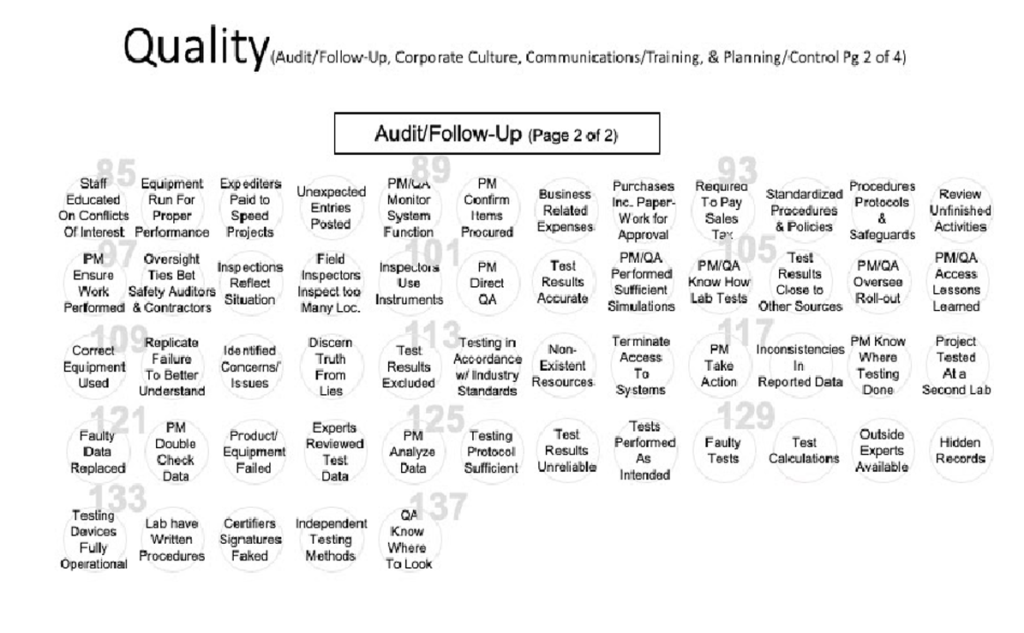

Audit/Follow-up (Cont’d)

85 – Is the staff educated regarding conflicts of interest? (No = bad)

86 – Was equipment run enough times to ensure proper performance in accordance with specifications? (No = bad)

87 – Are expediters paid to speed projects for approval? (Yes = blue)

88 – Are unexpected entries posted by unexpected people, at odd times such as weekends, or posted late? (Yes = bad)

89 – Does PM/QA have a way to monitor in real-time whether the product/ system is functioning properly and meeting regulations/ following processes? (Yes = good)

90 – Does the project manager confirm or review that the items on the shopping list, and only those items, are purchased? (Yes = good)

91 – Are policies in place to ensure that all charges are for business related expenses? (Yes = good)

92 – Do purchases include paperwork to indicate prior approval? (Yes = good)

93 – Is the project required to pay sales tax? If documentation proves ‘no’, is the project paying taxes anyway? (Yes = blue)

94 – Are standardized procedures and policies in place? (No = bad)

95 – Can procedures, protocols and safeguards be quickly changed, when required? (Yes = good)

96 – Does PM review unfinished activities associated with this project? (No = bad)

97 – Does PM ensure (by some effective means) that all work is performed properly? (Yes = good)

98 – Is there sufficient oversight of the ties between safety auditors and contractors/ clients to avoid potential conflicts of interest? (Yes = good)

99 – Do inspections reflect the actual situation when objectives are not being met? (No = bad)

100 – Are field inspectors required to inspect too many locations to be effective? (Yes = bad)

101 – Do/ did inspectors use instruments and professionally recognized criteria to confirm that equipment/ service is operating within the minimum safety limits? (Yes = good)

102 – Does PM direct QA to perform regular safety inspections? (Yes = good)

103 – Are test results accurate? (Yes = good) Are any tests run at the very edge of the product’s performance maximum/ minimum ranges (envelope)? (No = blue)

104 – Has PM/ QA performed sufficient simulations of the product/ service before roll-out?

105 – Does PM/ QA know how the lab does its testing? (No = bad)

106 – Do the project’s test results come comparatively close to other sources’ test results? (No = bad)

107 – Does PM/ QA oversee the roll-out of the project’s technology before delivery to the customer? (Yes = good)

108 – Does PM/ QA maintain/ have access to a lessons learned database which: Summarizes and distributes meeting minutes? Compiles a list of incidents? Lists recommendations for improvement? Tracks the completion status of recommendations and their deadlines?

109 – Is/ was the correct equipment used to test the end product? (Yes = good)

110 – When test failures occur, is there any attempt to replicate the failure to better understand how it occurred? (No = blue)

111 – Has the management team fully accepted and addressed previously identified concerns/ issues? (No = bad)

112 – Can/ does SM/PM discern truth from lies? (No = bad)

113 – Are any test results excluded from the reliability calculations? (Yes = bad)

114 – Is all testing and evaluation being performed in accordance with industry standards? (Yes = good)

115 – Is SM/PM paying for non-existent (phantom) resources (shell companies/ personnel/ invoices/ equipment, etc.)? (Yes = bad)

116 – Has SM/ PM implemented systems to terminate access to systems, resources, etc. for people who leave the project/ company? (Yes = good)

117 – Does PM take action (if required) as soon as test results are in/ problems are uncovered? (Yes = good)

118 – Are there inconsistencies in the reported data? (Yes = bad)

119 – Does PM know where the actual testing is being performed? (No = bad)

120 – (If possible) Has PM had the project tested at a second, independent laboratory in addition to the initial testing laboratory? Are the results similar? (Yes = good)

121 – Does PM ensure that faulty data is replaced once it has been discovered? (Yes = good)

122 – Does PM:

*Double check the data that comes across her/his desk? (Yes = good)

*Ask team to identify, evaluate and verify the reliability data source? (Yes = good)

*Have team evaluate the methods used to gather the data? (Yes = good)

*Explain to team why s/he wants to evaluate certain information? (Yes = good)

*Request:

*Divergent/ clashing facts/ facts from diverse sources

*Different facts that support different conclusions (but are still rooted in reality)

123 – Has product/ equipment failed a number of real-life tests? (Yes = bad)

124 – Have qualified experts seen and reviewed the test data? (Yes = good)

125 – Does PM make time to analyze the data to establish confidence in the results? (No = bad)

126 – Is the current testing protocol sufficient? (Yes = good) If the protocol is insufficient, has additional testing been added to establish accuracy? (Yes = blue)

127 – Are test results often unreliable due to: (Yes = red)

*Being skewed? Inconsistent? Inaccurate?

*Human errors?

*Incorrect data entry?

*Use of outdated materials/ components?

*Poor oversight?

*Improper calibration?

*Lack of technical/ management competence/ expertise? Inexperience?

*Poor standards? Failure to use acceptable standards?

*Programming mistakes?

*Bypassed/ disabled safeguards?

*Technical/ mechanical issues that can potentially yield the wrong result?

128 – Are tests now being performed as intended? (Yes = good)

129 – Are/ were cases built on faulty tests? (Yes = bad)

130 – Does PM/ QA know and understand: (Yes = good)

*How to run the test calculations?

*Whether the code has any built-in flaws?

131 – If relevant, are outside third-party experts available to analyze the testing software?

132 – Has QA discovered (or anyone reported) any undisclosed/ hidden records of, or withheld changes to:

*Test equipment?

*Test procedures?

*Equipment or process being evaluated?

133 – Are testing devices fully operational right out-of-the-box? (No = blue)

134 – Does the test lab have a written procedure to set up and test machines? (No = red)

135 – Are test records/ certifiers’ signatures faked to show that instruments were tested/ calibrated when they were never touched? (Yes = red)

136 – Can/ do two separate/ independent testing methods produce substantially different results? (Yes = red)

137 – Do QA people know where to look and what to look for with respect to this project? (No = bad)
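
Where posting records are available electronically, the screening asked for in item 88 (unexpected people, odd times such as weekends) could be partly automated. The following is only a minimal sketch under stated assumptions: the `Entry` record, the `flag_entry` function, the set of expected posters and the 08:00 to 18:00 business-hours window are all hypothetical and would need to be replaced with the project's own definitions. It does not cover late postings, which would require a period-close date that the checklist does not specify.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass
class Entry:
    """Hypothetical posting record; field names are illustrative only."""
    entry_id: str
    posted_by: str
    posted_at: datetime


def flag_entry(entry, expected_posters, day_start=time(8, 0), day_end=time(18, 0)):
    """Return the reasons an entry looks unusual (empty list if none).

    The business-hours window is an assumption, not a rule from the checklist.
    """
    reasons = []
    if entry.posted_by not in expected_posters:
        reasons.append("unexpected poster")
    if entry.posted_at.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        reasons.append("weekend")
    elif not (day_start <= entry.posted_at.time() <= day_end):
        reasons.append("outside business hours")
    return reasons


if __name__ == "__main__":
    expected = {"alice"}  # hypothetical list of people who normally post
    sample = Entry("J-2", "mallory", datetime(2024, 3, 9, 10, 0))
    print(sample.entry_id, flag_entry(sample, expected))
```

A flagged entry is a prompt for follow-up by the auditor, not proof of wrongdoing; legitimate weekend or after-hours work would also be flagged.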